This post is about Cache Connection Manager and Cache Transform. The cache connection manager reads data from cache transformation or from a cache files (.caw) and can save the data to a cache file. Using the Cache Connection Manager (CCM) is a new option for the Lookup transform in SSIS 2008. The CCM provides an alternative to doing lookups against a database table using an OLEDB connection. Whether you configure the cache connection manager to use a cache file, the data is always stored in memory. The cache connection manager does not support the Binary large Object (BLOB) data type DT_TEXT, DT_NTEXT and DT_IMAGE.

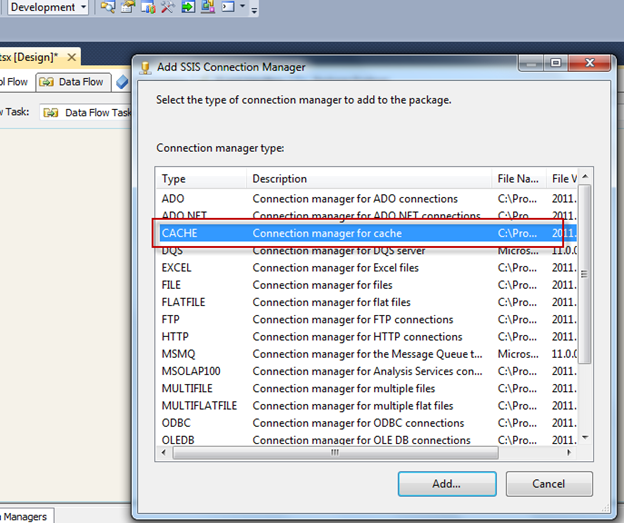

The following images shows that how to create New Cache Connection.

Cache connection can be made by file or in memory.

Option 1: Create cache directly in memory.

Option 2: Create cache use file cache.

Here I am going to demonstrate without use file cache. As I am going to use Product Number as mapping column so that make the index for Product Number as 1, and leave others as default.

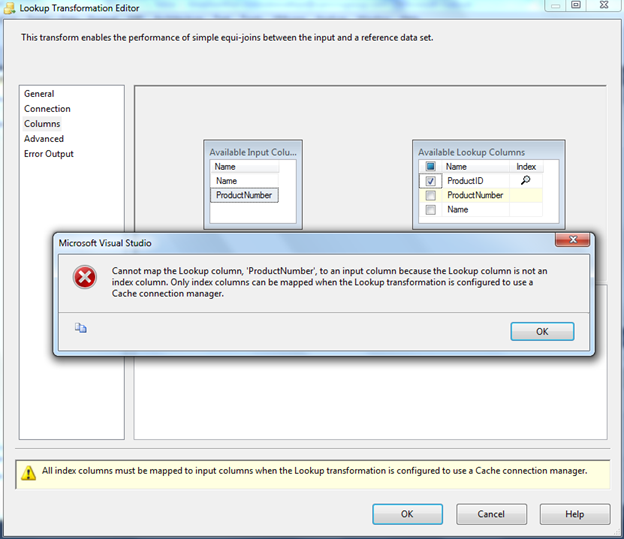

The Index Position column if set to something greater than 0 simply denotes an index, let’s say a key or Primary Key if you wish. But this mapped column should be indexed, otherwise you will get error message as below:

Error

message: Cannot map the Lookup column, 'ProductNumber', to an input column

because the Lookup column is not an index column. Only index columns can be

mapped when the Lookup transformation is configured to use a Cache connection

manager.

You can use any source format such as csv, excel and etc.

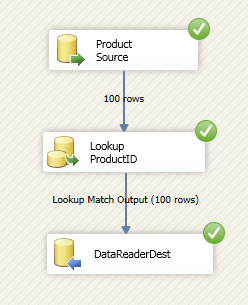

The following images show that how to do the Case Transformation using Cache Transform component. Here I am using OleDBConnection to get the data from table.

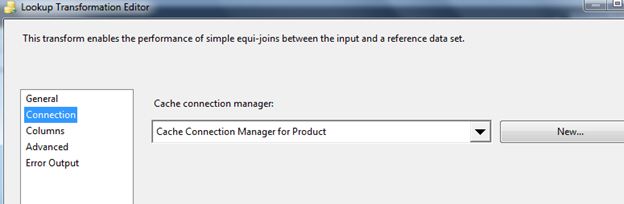

Now let see how to use this case transformation under lookup.

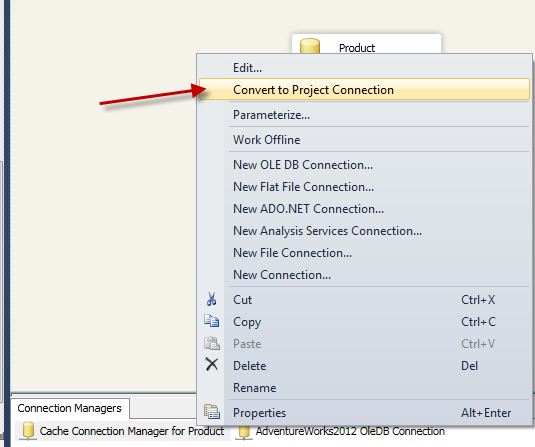

If you want you can use same cache under any package under this project, you need to make it as Cache as Project Connection Manager.

If you want you can use same cache under any package under this project, you need to make it as Cache as Project Connection Manager.

Cheers!

Cheers!

Error message: Cannot map the Lookup column, 'ProductNumber', to an input column because the Lookup column is not an index column. Only index columns can be mapped when the Lookup transformation is configured to use a Cache connection manager.